If you’ve been anywhere near AI circles lately, you’ve probably seen the name OpenClaw floating around — sometimes described as an “autonomous agent,” sometimes as a successor to tools like MoltBot or ClawDBot.

But what is OpenClaw, really?

Is it another chatbot? A database wrapper? A developer tool? A research project? A threat to traditional SaaS?

Short answer: It’s an open, autonomous AI agent framework designed to take actions — not just generate text — across tools, data systems, and environments.

Long answer? That’s where things get interesting.

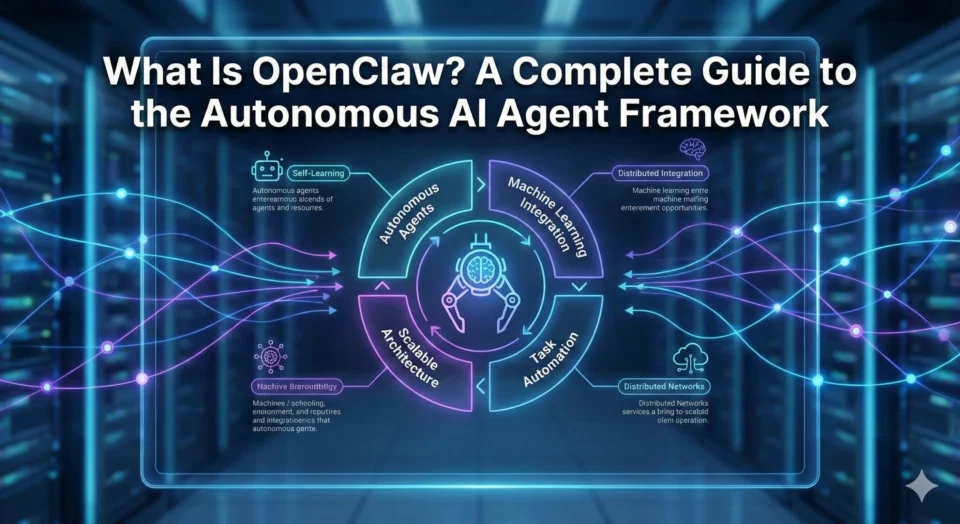

What Is OpenClaw?

OpenClaw is an open-source autonomous AI agent framework designed to plan, reason, and execute tasks across connected tools and data systems with minimal human intervention.

Unlike traditional chatbots that simply respond to prompts, OpenClaw is built to:

- Interpret goals

- Break them into sub-tasks

- Access relevant data sources

- Execute multi-step actions

- Adjust based on outcomes

In other words, it moves from “AI that answers” to “AI that acts.”

That shift — from passive assistant to active agent — is the core idea behind OpenClaw.


Why Does OpenClaw Exist?

Most AI systems today still depend heavily on:

- Structured prompts

- Pre-defined workflows

- Manual intervention

- Static data

But real-world tasks are messy.

For example:

- “Analyze last quarter’s sales data and suggest pricing adjustments.”

- “Scan our support tickets and identify recurring product complaints.”

- “Monitor competitor announcements and summarize key changes weekly.”

These require:

- Data retrieval

- Context awareness

- Multi-step reasoning

- Tool integration

- Decision logic

OpenClaw was built to operate in that messy middle — between pure LLMs and full automation systems.

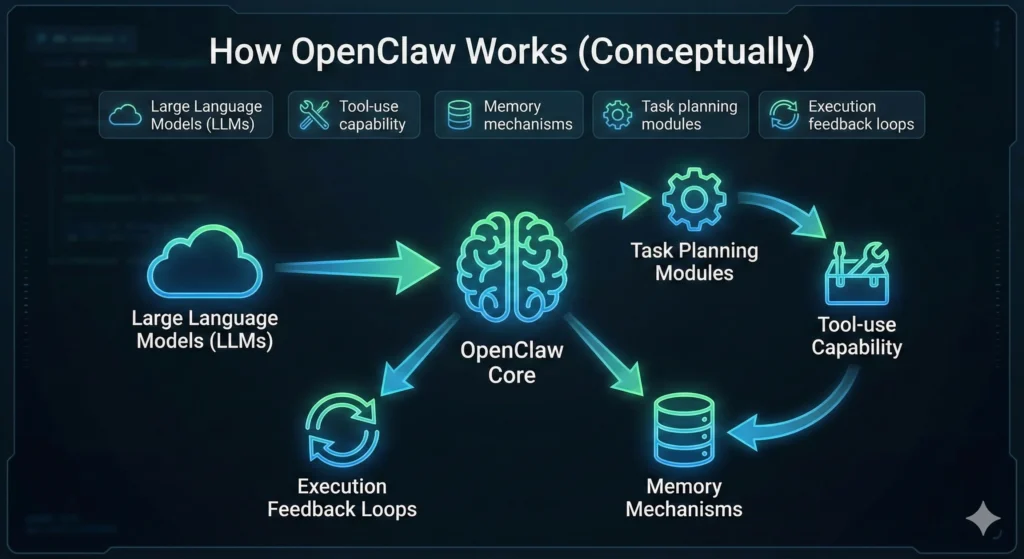

How OpenClaw Works (Conceptually)

At a high level, OpenClaw combines:

- Large Language Models (LLMs)

- Tool-use capability

- Memory mechanisms

- Task planning modules

- Execution feedback loops

Let’s break that down.

1. Goal Interpretation

You give the agent a high-level objective.

Example:

“Identify underperforming marketing campaigns and recommend optimizations.”

The system parses intent — not just keywords.
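To make “parsing intent” concrete, here is a minimal Python sketch that asks a model to restate a free-form request as structured fields. It assumes a hypothetical `fake_llm` function standing in for a real model call, and the `Goal` schema (`objective`, `entities`, `deliverable`) is invented for illustration. It is not OpenClaw’s API.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Goal:
    """Structured form of a high-level objective (hypothetical schema)."""
    objective: str
    entities: list[str] = field(default_factory=list)
    deliverable: str = ""


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call. A real agent would send `prompt` to an
    # LLM and ask for JSON matching the Goal schema; here the reply is canned.
    return json.dumps({
        "objective": "find underperforming campaigns and recommend fixes",
        "entities": ["marketing campaigns"],
        "deliverable": "optimization recommendations",
    })


def interpret_goal(request: str) -> Goal:
    """Ask the model to restate a free-form request as structured intent."""
    prompt = (
        "Restate the request below as JSON with the keys "
        "'objective', 'entities', and 'deliverable'.\n\n" + request
    )
    data = json.loads(fake_llm(prompt))
    # Goal(**data) raises TypeError if keys are missing or unexpected.
    return Goal(**data)


goal = interpret_goal(
    "Identify underperforming marketing campaigns and recommend optimizations."
)
print(goal.deliverable)  # optimization recommendations
```

Validating the model’s reply against a fixed schema means later steps work with known fields instead of re-reading free text.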

2. Task Decomposition

The agent breaks the request into smaller steps:

- Retrieve campaign performance data

- Define performance thresholds

- Compare metrics

- Identify outliers

- Generate optimization suggestions

This decomposition is where autonomous agents differ from simple prompt-response systems.
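A decomposed plan is often easiest to handle as a small dependency graph. The sketch below uses Python’s standard-library `graphlib` to order the five steps listed above; the step identifiers are hypothetical, and this is one common way to represent a plan, not a description of how OpenClaw stores one.

```python
from graphlib import TopologicalSorter

# Hypothetical plan for the campaign example: each step maps to the set of
# steps that must finish first. A real agent would have an LLM propose this.
plan = {
    "retrieve_campaign_data": set(),
    "define_thresholds": set(),
    "compare_metrics": {"retrieve_campaign_data", "define_thresholds"},
    "identify_outliers": {"compare_metrics"},
    "generate_suggestions": {"identify_outliers"},
}

# static_order() yields every step after all of its dependencies and raises
# graphlib.CycleError if the proposed plan is circular.
order = list(TopologicalSorter(plan).static_order())
print(order)
```

Steps with no dependencies on each other (here, retrieving data and defining thresholds) come out in an unspecified relative order, which also signals that they could run in parallel.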

3. Tool Interaction

OpenClaw can interact with:

- Databases

- APIs

- Vector stores

- External services

- Internal dashboards

Instead of answering from the model’s training data alone, which invites hallucinated answers, it can query the systems that actually hold the information.
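A common pattern for tool use is a registry: the model names a tool and its arguments, and the framework dispatches only to functions that were explicitly registered. The sketch below assumes a hypothetical `get_campaign_metrics` tool backed by an in-memory dictionary; the `tool` decorator and `call_tool` dispatcher are illustrative, not OpenClaw’s actual interface.

```python
from typing import Any, Callable

# Hypothetical registry mapping tool names the model may request to ordinary
# Python functions. Names and signatures are illustrative only.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(name: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Decorator that registers a function under `name`."""
    def register(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = fn
        return fn
    return register


@tool("get_campaign_metrics")
def get_campaign_metrics(campaign_id: str) -> dict[str, float]:
    # Stand-in for a real database or API query.
    fake_db = {"spring_sale": {"ctr": 0.8, "cpa": 42.0}}
    return fake_db.get(campaign_id, {})


def call_tool(name: str, **kwargs: Any) -> Any:
    """Dispatch a model-requested call; unregistered tools are refused."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**kwargs)


# A tool request as it might look after parsing the model's JSON output.
request = {"tool": "get_campaign_metrics", "args": {"campaign_id": "spring_sale"}}
result = call_tool(request["tool"], **request["args"])
print(result)  # {'ctr': 0.8, 'cpa': 42.0}
```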

4. Memory + Context Retention

It stores intermediate results, tracks progress, and can revisit earlier steps.

This makes long, multi-stage tasks possible.
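Working memory can be as simple as a keyed store of step results plus a log of what ran. The hypothetical `Scratchpad` class below keeps everything in memory for a single run; a real framework might persist this state to a database or vector store so that long tasks can resume.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Scratchpad:
    """Hypothetical working memory for one task run."""
    results: dict[str, Any] = field(default_factory=dict)
    history: list[str] = field(default_factory=list)

    def record(self, step: str, result: Any) -> None:
        # Store the latest result for a step and log that it ran.
        self.results[step] = result
        self.history.append(step)

    def recall(self, step: str, default: Any = None) -> Any:
        # Later steps read earlier outputs instead of recomputing them.
        return self.results.get(step, default)

    def completed(self) -> set[str]:
        return set(self.results)


memory = Scratchpad()
memory.record("retrieve_campaign_data", [{"id": "spring_sale", "ctr": 0.8}])
memory.record("define_thresholds", {"min_ctr": 1.0})

# A later step revisits two earlier results to compute its own.
rows = memory.recall("retrieve_campaign_data")
threshold = memory.recall("define_thresholds")["min_ctr"]
outliers = [r["id"] for r in rows if r["ctr"] < threshold]
memory.record("identify_outliers", outliers)
print(outliers)  # ['spring_sale']
```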

5. Iterative Execution

If something fails — a data fetch, a malformed response — the system can retry, adjust, or request clarification.

That loop is what makes it “agentic.”
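The retry-then-escalate loop might look like the following minimal sketch. It assumes that `ConnectionError` and `ValueError` (which includes `json.JSONDecodeError`) are the retryable failures and that escalation means raising an error for a human to handle; `run_with_retries` and `flaky_fetch` are hypothetical names.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def run_with_retries(
    step: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.1,
) -> T:
    """Run `step`, retrying transient failures with exponential backoff."""
    last_error: Optional[Exception] = None
    for attempt in range(attempts):
        try:
            return step()
        except (ConnectionError, ValueError) as err:
            # Treat network errors and malformed responses as retryable.
            last_error = err
            if attempt < attempts - 1:
                time.sleep(base_delay * 2 ** attempt)  # 0.1s, 0.2s, ...
    # Out of retries: escalate (e.g. ask a human) instead of guessing.
    raise RuntimeError(f"step failed after {attempts} attempts") from last_error


calls = {"n": 0}


def flaky_fetch() -> dict:
    """Hypothetical data fetch that times out twice, then succeeds."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("timeout")
    return {"rows": 12}


result = run_with_retries(flaky_fetch)
print(result, calls["n"])  # {'rows': 12} 3
```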


Is OpenClaw Just Another AI Agent?

Not exactly.

OpenClaw is positioned as:

- Open-source

- Modular

- Framework-oriented

- Designed for customization

That makes it different from proprietary AI agents embedded in SaaS platforms.

It’s closer to infrastructure than product.

If ChatGPT is a polished assistant, OpenClaw is more like a programmable assistant engine that developers can adapt.

OpenClaw vs Traditional AI Tools

| Feature | Chatbots | Workflow Automation | OpenClaw |
|---|---|---|---|
| Text generation | ✅ | ❌ | ✅ |
| Tool execution | Limited | ✅ | ✅ |
| Multi-step planning | ❌ | Predefined only | ✅ Dynamic |
| Autonomy | Low | Conditional | High |
| Open-source | Rare | Mixed | Yes |

The key differentiator is dynamic planning.

Workflow tools follow scripts.

OpenClaw creates them on the fly.
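The difference can be shown with a toy planner. In this sketch, `choose_next_step` is a hypothetical rule-based stand-in for an LLM that inspects the current state and picks the next action, so an empty result triggers a `widen_date_range` step that a fixed script would not include. The step names and simulated effects are invented for illustration.

```python
from typing import Optional


def choose_next_step(state: dict) -> Optional[str]:
    """Hypothetical stand-in for an LLM planner: pick the next step from the
    current state, or return None once the goal is met."""
    if "data" not in state:
        return "fetch_data"
    if not state["data"]:
        return "widen_date_range"  # a step a fixed script never anticipated
    if "report" not in state:
        return "build_report"
    return None


def run_agent(state: dict, max_steps: int = 10) -> list[str]:
    steps_taken = []
    for _ in range(max_steps):  # cap steps so a bad plan cannot loop forever
        step = choose_next_step(state)
        if step is None:
            break
        steps_taken.append(step)
        # Simulated effects of each step on the environment.
        if step == "fetch_data":
            state["data"] = state.get("source_rows", [])
        elif step == "widen_date_range":
            state["data"] = [{"sales": 100}]
        elif step == "build_report":
            state["report"] = f"{len(state['data'])} rows"
    return steps_taken


# A workflow tool would always run: fetch_data -> build_report -> email.
print(run_agent({}))  # ['fetch_data', 'widen_date_range', 'build_report']
print(run_agent({"source_rows": [{"sales": 5}]}))  # ['fetch_data', 'build_report']
```

The `max_steps` cap is a deliberate design choice: an agent that plans on the fly also needs a hard limit so that a confused planner cannot loop indefinitely.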

Why OpenClaw Matters in 2026

Autonomous agents are becoming the next layer of AI infrastructure.

Companies are moving from asking “How do we use ChatGPT?” to asking “How do we let AI handle entire operational processes?”

OpenClaw represents that shift.

And more importantly, it highlights a larger trend:

Data readiness now matters more than model quality.

Because an agent is only as good as:

- The systems it can access

- The permissions it has

- The structure of your data

- The guardrails you set

If your internal data is messy, fragmented, or siloed, even the smartest agent struggles.

Common Use Cases for OpenClaw

Here’s where organizations are experimenting:

1. Internal Data Agents

- Query internal knowledge bases

- Summarize policy updates

- Track KPIs automatically

2. DevOps & Engineering Support

- Monitor logs

- Flag anomalies

- Suggest fixes

3. Sales & Marketing Automation

- Analyze campaign performance

- Generate reports

- Trigger follow-ups

4. Research & Competitive Intelligence

- Scrape structured sources

- Compare updates

- Generate briefs

Notice the pattern?

These are cross-system tasks, not single-database queries.

Is OpenClaw Safe?

This is the uncomfortable question.

Autonomous agents introduce new risks:

- Over-permissioned access

- Data leakage

- Unexpected actions

- Hallucinated execution steps

- Cascading errors

Security with agent frameworks must include:

- Permission scoping

- Audit logging

- Sandboxed execution

- Human approval checkpoints

- Strict tool access policies

In practice, OpenClaw is powerful — but it should not be deployed casually.
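Several of these controls (strict tool access, human approval checkpoints, and audit logging) can be enforced in a thin wrapper around every tool call. The following is a minimal sketch under assumed policy sets (`ALLOWED_TOOLS`, `NEEDS_APPROVAL`) and a hypothetical `send_refund` tool. Sandboxed execution and per-user permission scoping are not shown; they typically rely on OS- or container-level isolation rather than application code.

```python
import json
import time
from typing import Any, Callable

# Hypothetical policy: tools this agent may call, and which need human sign-off.
ALLOWED_TOOLS = {"read_tickets", "send_refund"}
NEEDS_APPROVAL = {"send_refund"}
AUDIT_LOG: list[str] = []


def audit(event: str, **details: Any) -> None:
    """Append a JSON record of every attempted action. A real deployment would
    write to durable, tamper-evident storage, not an in-memory list."""
    AUDIT_LOG.append(json.dumps({"ts": time.time(), "event": event, **details}))


def guarded_call(
    name: str,
    fn: Callable[..., Any],
    approve: Callable[[str, dict], bool],
    **kwargs: Any,
) -> Any:
    """Run `fn` only if policy allows it, logging the outcome either way."""
    if name not in ALLOWED_TOOLS:
        audit("denied", tool=name)
        raise PermissionError(f"tool not permitted: {name}")
    if name in NEEDS_APPROVAL and not approve(name, kwargs):
        audit("rejected_by_human", tool=name, args=kwargs)
        raise PermissionError(f"approval refused for: {name}")
    audit("executed", tool=name, args=kwargs)
    return fn(**kwargs)


def send_refund(order_id: str, amount: float) -> str:
    return f"refunded {amount} on {order_id}"  # stand-in for a payments API


# A reviewer who rejects the request blocks the action...
try:
    guarded_call("send_refund", send_refund, lambda t, a: False,
                 order_id="A1", amount=20.0)
except PermissionError as err:
    print(err)  # approval refused for: send_refund

# ...while an approved request runs and is logged.
result = guarded_call("send_refund", send_refund, lambda t, a: True,
                      order_id="A1", amount=20.0)
print(result)  # refunded 20.0 on A1
```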

OpenClaw vs Other AI Agent Frameworks

OpenClaw is part of a broader ecosystem of agentic systems.

Comparable categories include:

- Research-driven autonomous agents

- Tool-augmented LLM frameworks

- Multi-agent orchestration systems

Its differentiation lies in its openness and modularity — rather than being tightly coupled to a single vendor’s ecosystem.

That matters for:

- Enterprises avoiding lock-in

- Developers building custom internal systems

- Teams experimenting with agentic automation

Who Should Pay Attention to OpenClaw?

Not everyone.

If you’re a casual ChatGPT user, OpenClaw probably isn’t for you.

But it’s highly relevant if you are:

- A CTO evaluating AI infrastructure

- A data engineer building internal AI tooling

- A startup experimenting with agent-based automation

- A product team designing AI-native workflows

It’s less about using OpenClaw directly and more about understanding what it represents.

The Bigger Picture: Agents, Not Prompts

We’re moving from:

Prompt engineering → System orchestration.

That shift changes:

- How AI products are built

- How data is structured

- How workflows are automated

- How companies think about AI readiness

OpenClaw is one example of that transition.

Not the only one.

But a visible one.

Final Thoughts: What OpenClaw Really Is

At its core, OpenClaw is:

- An open autonomous AI agent framework

- Designed for multi-step task execution

- Built around tool interaction and dynamic planning

- Geared toward developers and infrastructure teams

It is not:

- A consumer chatbot

- A plug-and-play SaaS app

- A magic automation switch

It’s infrastructure.

And like most infrastructure tools, its power depends entirely on how well you implement it.

TL;DR

OpenClaw is an open-source autonomous AI agent framework that can plan, reason, and execute multi-step tasks across tools and data systems with minimal human intervention.

It represents a broader shift toward AI agents that act — not just respond.

And whether it becomes a long-term standard or an evolutionary stepping stone, one thing is clear:

The future of AI isn’t just conversational.

It’s operational.

FAQs About OpenClaw

Is OpenClaw free to use?

Yes. OpenClaw is open-source, meaning the framework itself is free to use. However, you may incur costs for infrastructure, APIs, hosting, or large language model usage depending on how you deploy it.

Does OpenClaw require technical expertise?

Yes. OpenClaw is designed for developers and technical teams. Setting it up typically requires knowledge of APIs, system architecture, and AI tooling.

Can OpenClaw run offline?

It depends on your setup. If configured with local models and local data sources, it can operate offline. However, most real-world implementations rely on cloud-based APIs and external services.

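What programming language is OpenClaw built with?

Many agent frameworks are built primarily in Python, but not all are, so check the OpenClaw repository for its actual implementation language. Integrations may support other languages depending on the APIs and system architecture involved.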

Is OpenClaw the same as ChatGPT?

No. OpenClaw is an agent framework, while ChatGPT is a conversational AI product. OpenClaw can use large language models (including ones similar to ChatGPT) as part of its reasoning engine.

Can small businesses use OpenClaw?

Technically yes, but it may be overkill for small teams without engineering resources. It’s better suited for organizations building custom AI automation systems.

Where does OpenClaw store data?

That depends on the implementation. Since it’s open-source, data storage is controlled by whoever deploys it. Organizations must configure their own storage, security, and compliance policies.

How is OpenClaw different from RPA (robotic process automation)?

RPA follows fixed, rule-based scripts. OpenClaw dynamically plans and adapts tasks using AI reasoning, making it more flexible but also more complex.

Can OpenClaw connect to databases and APIs?

Yes. It can integrate with databases, APIs, and other structured data sources, depending on configuration and permissions.

Is OpenClaw ready for enterprise production use?

It depends on the maturity of the deployment and the safeguards in place. Open-source frameworks often require customization, testing, and security hardening before enterprise production use.

Can individuals use OpenClaw for personal productivity?

In theory, yes. In practice, it’s more commonly used for organizational workflows than for individual task management.

Does OpenClaw support multi-agent workflows?

Yes. Many autonomous agent frameworks allow multiple agents to collaborate, delegate subtasks, or validate each other’s outputs.
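A basic delegate-and-validate pattern can be sketched with plain functions standing in for agents. Here `summarizer` (worker), `reviewer` (validator), and `coordinator` are hypothetical names; real multi-agent setups would give each agent its own model prompt, tools, and permissions.

```python
from typing import Callable

Worker = Callable[[str], str]
Validator = Callable[[str, str], bool]


def summarizer(task: str) -> str:
    """Worker agent stand-in; a real one would call an LLM."""
    return f"summary of {task}"


def reviewer(task: str, draft: str) -> bool:
    """Validator agent stand-in: accept only non-empty drafts about the task."""
    return bool(draft) and task in draft


def coordinator(
    subtasks: list[str], worker: Worker, validator: Validator
) -> tuple[dict[str, str], list[str]]:
    """Delegate each subtask to a worker and split results by validation."""
    accepted: dict[str, str] = {}
    rejected: list[str] = []
    for task in subtasks:
        draft = worker(task)
        if validator(task, draft):
            accepted[task] = draft
        else:
            rejected.append(task)  # candidates for retry or human review
    return accepted, rejected


accepted, rejected = coordinator(
    ["ticket batch 1", "ticket batch 2"], summarizer, reviewer
)
print(accepted["ticket batch 1"])  # summary of ticket batch 1
print(rejected)  # []
```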

Which industries are exploring autonomous agents like OpenClaw?

Industries such as fintech, SaaS, cybersecurity, e-commerce, and research organizations are exploring autonomous AI agents for operational automation.

How long does it take to implement OpenClaw?

Implementation time varies. A basic prototype may take days, while an enterprise-grade deployment with security and integrations can take weeks or months.

Is OpenClaw secure by default?

No system is secure by default. Security depends on configuration, access controls, sandboxing, monitoring, and human oversight.